Project objective

As the name suggests, the project replaces the mouse with an eye-tracking bar to speed up one’s workflow. The goal is not to operate the computer using eye tracking alone: in our implementation, the eye-tracking bar is used in combination with the keyboard. The idea is similar to the text editor Vi, which is operated entirely from the keyboard so that your hands never have to leave it. The advantage of eye tracking over Vi is that you don’t need to memorize 100 different shortcuts. Instead, you only need to learn around 5–8, while still keeping your hands on the keyboard at all times.

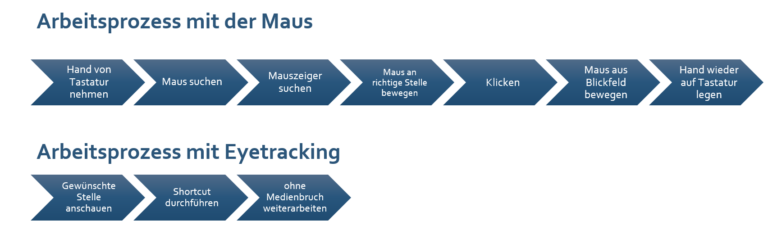

Comparison Between Working with a Mouse and with Eye Tracking

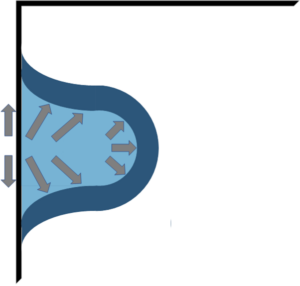

The illustration shows how many more steps are required when working with a mouse than when working with eye tracking.

To complete the bachelor’s degree at HSW, every student must participate in a project study during the 5th and 6th semesters. The project study is typically provided by an external company, and students are required to organize themselves in order to meet the project’s requirements.

Lars Kölker, Lukas Hein, and I chose the project titled “Eye Tracking as a Replacement for the Mouse.”

Project history

The project is already five years old and was previously worked on by other students. They developed a C# application that reads data from a Tobii eye-tracking bar and, triggered by keyboard shortcuts, converts it into mouse input. When we took over the project, the application already included functions for scrolling and zooming.

There is also a training and testing application (TuLs), which lets users learn and practice operating the eye-tracking bar in combination with our application. This software was especially important for the user studies conducted in previous phases of the project.

Training and Testing Software (TuLs)

Our goal

At the beginning of the project study, we were given three requirements:

Designing and developing a solution for the edge-case problem related to the magnifier tool

Implementing a new scrolling approach

Writing an academic paper

The Fisheye Magnifier

The fisheye magnifier is an important feature for operating the computer with the eye-tracking bar: it compensates for the remaining inaccuracy of the eye tracker. A fisheye magnifier was chosen over a linear magnifier because it enlarges the area without hiding the content around it. This matters because, as with a mouse click, the magnifier cannot always be placed with 100% accuracy, and a magnifier that covers its surroundings could accidentally hide the very area that is meant to be magnified.
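To make the idea more concrete, here is a minimal sketch of a radial fisheye mapping. It uses a distortion function that is common in the fisheye-view literature, g(x) = (d + 1)x / (dx + 1), and is not necessarily what our C# application does. The function names, the lens radius, and the distortion factor are assumptions chosen for illustration.

```python
import math


def fisheye_radius(r: float, lens_radius: float, distortion: float) -> float:
    """Map a distance r from the lens center to its displayed distance.

    Points near the center are pushed outward (magnified); the lens border
    stays where it is, so nothing outside the lens is covered.
    """
    if r >= lens_radius:
        return r  # outside the lens nothing moves
    x = r / lens_radius  # normalized distance in [0, 1)
    return lens_radius * ((distortion + 1) * x) / (distortion * x + 1)


def fisheye_point(px, py, cx, cy, lens_radius, distortion=3.0):
    """Return where the screen point (px, py) is drawn for a lens at (cx, cy)."""
    dx, dy = px - cx, py - cy
    r = math.hypot(dx, dy)
    if r == 0 or r >= lens_radius:
        return (px, py)
    scale = fisheye_radius(r, lens_radius, distortion) / r
    return (cx + dx * scale, cy + dy * scale)
```

With a lens radius of 100 and a distortion factor of 3, a point halfway between center and border is drawn at 80% of the radius, while points on or beyond the border do not move at all.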

The Edge Problem

One problem that still exists with the magnifier is its operation near the edge of the screen. When the magnifier is used close to the screen edge, part of the enlarged area may extend beyond the visible screen.

Fisheye Magnifier at the Edge

To counter this problem, we developed a concept for the fisheye magnifier at the screen edges. The idea is that the magnifier, when near the edge, behaves like a water droplet, gently hugging the edge of the screen.

Concept for the Teardrop Magnifier

However, this means that the enlarged area can no longer be centered exactly on the gaze point; instead, it expands toward the center of the screen. This, in turn, creates another problem: the point the user is focusing on shifts abruptly to a new position. Such behavior can briefly disorient the user.

A solution to this issue is an animated magnifier that gradually grows to a predetermined size, providing a smoother and less jarring transition.
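The following sketch shows how such an animation could soften the shift. It is a simplified stand-in for the teardrop concept: instead of deforming the lens, it keeps a circular lens fully on screen by clamping its center, and it grows the radius with an ease-out curve so the center drifts inward gradually. All names, the 200 ms duration, and the cubic easing are assumptions, not values from our application.

```python
def clamp_lens_center(gaze_x, gaze_y, radius, screen_w, screen_h):
    """Shift the lens center inward so a lens of `radius` stays on screen.

    Assumes the screen is at least 2 * radius wide and high.
    """
    cx = min(max(gaze_x, radius), screen_w - radius)
    cy = min(max(gaze_y, radius), screen_h - radius)
    return cx, cy


def animated_radius(elapsed_ms, duration_ms, final_radius):
    """Grow the radius from 0 to final_radius with an ease-out cubic curve."""
    t = min(max(elapsed_ms / duration_ms, 0.0), 1.0)
    eased = 1 - (1 - t) ** 3
    return final_radius * eased


def lens_frame(gaze_x, gaze_y, elapsed_ms, screen_w, screen_h,
               final_radius=100.0, duration_ms=200.0):
    """Return (center_x, center_y, radius) for one animation frame."""
    radius = animated_radius(elapsed_ms, duration_ms, final_radius)
    cx, cy = clamp_lens_center(gaze_x, gaze_y, radius, screen_w, screen_h)
    return cx, cy, radius
```

For a gaze point 10 pixels from the left edge, the lens starts exactly on the gaze point with radius 0 and ends centered 100 pixels from the edge. Because the center is re-clamped on every frame, the shift happens in small steps rather than in one jump.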

New Scrolling Approach

The current scrolling method in our application is similar to scrolling with the middle mouse button. The scrolling mode must first be activated; after that, you scroll by moving your gaze to the top or bottom edge of the screen. The drawback of this approach is that you cannot read through the page while scrolling, because the gaze movement is used to control the scrolling itself.

The new approach solves this problem. Here’s how it works: The user looks at a point of focus on the screen and presses one of two shortcuts. Depending on the shortcut pressed, the page scrolls until the focus point sits at either the top or the bottom edge of the screen. Because the gaze is only needed to pick the point, the user is free to scan through the page while it scrolls. This approach can be compared to using the “Page Up” and “Page Down” keys.
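The arithmetic behind this is small enough to sketch. The snippet below computes how far the page has to scroll so that the focus point ends up at the chosen edge, and splits that distance into steps for a smooth animation. The function names, the direction strings, and the optional margin are assumptions for illustration; positive values mean "scroll down".

```python
def scroll_delta(focus_y, viewport_height, direction, margin=0):
    """Pixels to scroll so the focus point lands at the top or bottom edge.

    focus_y is the gaze position measured from the top of the viewport.
    A positive result scrolls the page down, a negative one scrolls it up.
    """
    if direction == "to_top":
        return focus_y - margin
    if direction == "to_bottom":
        return focus_y - (viewport_height - margin)
    raise ValueError(f"unknown direction: {direction!r}")


def scroll_steps(delta, steps):
    """Split an integer scroll distance into `steps` nearly equal parts."""
    base, rest = divmod(abs(delta), steps)
    sign = 1 if delta >= 0 else -1
    return [sign * (base + (1 if i < rest else 0)) for i in range(steps)]
```

On a 1080-pixel-high viewport with the gaze at y = 600, moving the focus point to the top means scrolling down by 600 pixels, while moving it to the bottom means scrolling up by 480 pixels.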

Writing a Paper

The final goal of our project study was to write a paper. Originally, the paper was supposed to focus on the new scrolling approach. However, it turned out that this topic had already been covered a few years ago by someone else. So, we had to come up with a new topic at short notice. We decided to focus on a new feature instead: the Auto-Magnifier.

The idea is this: if our application detects that there are many clickable objects around the point of gaze, it should open the magnifier instead of registering a click. Two concepts have already been developed to detect when the magnifier should be activated:

Detection via edge counting

Detection via machine learning

Edge counting is the simpler of the two. It uses an image processing library to count how many enclosed blocks there are in the area around the gaze point. If the count reaches a fixed threshold, the magnifier opens; otherwise, the click is registered as usual.
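As a rough illustration of the counting step, the sketch below works on a tiny binary edge map (1 = edge pixel, 0 = background) and counts the background regions that are completely surrounded by edges. It uses a plain flood fill instead of an image processing library so that it runs without dependencies. The function names and the threshold of 2 are assumptions, and a real implementation would first need an edge detector to produce the edge map from a screenshot.

```python
from collections import deque


def count_enclosed_regions(edges):
    """Count background regions that do not touch the border of the patch."""
    h, w = len(edges), len(edges[0])
    seen = [[False] * w for _ in range(h)]
    count = 0
    for y in range(h):
        for x in range(w):
            if edges[y][x] or seen[y][x]:
                continue
            # Flood-fill one connected background region (4-connectivity).
            touches_border = False
            queue = deque([(x, y)])
            seen[y][x] = True
            while queue:
                cx, cy = queue.popleft()
                if cx in (0, w - 1) or cy in (0, h - 1):
                    touches_border = True
                for nx, ny in ((cx + 1, cy), (cx - 1, cy),
                               (cx, cy + 1), (cx, cy - 1)):
                    if (0 <= nx < w and 0 <= ny < h
                            and not edges[ny][nx] and not seen[ny][nx]):
                        seen[ny][nx] = True
                        queue.append((nx, ny))
            if not touches_border:
                count += 1
    return count


def should_open_magnifier(edges, threshold=2):
    """Open the magnifier if enough enclosed blocks surround the gaze point."""
    return count_enclosed_regions(edges) >= threshold


# Two small boxes next to each other, e.g. two adjacent buttons.
PATCH = [
    [0, 0, 0, 0, 0, 0, 0, 0, 0],
    [0, 1, 1, 1, 0, 1, 1, 1, 0],
    [0, 1, 0, 1, 0, 1, 0, 1, 0],
    [0, 1, 1, 1, 0, 1, 1, 1, 0],
    [0, 0, 0, 0, 0, 0, 0, 0, 0],
]
```

For `PATCH`, the outer background touches the border and is ignored, so only the two box interiors are counted. With the assumed threshold of 2, the magnifier would open here instead of a click being registered.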

The second concept is more complex. A machine learning model is to be trained to count potentially clickable objects within an image section. Training such a model requires a large number of labeled samples, each consisting of an image section and the corresponding number of clickable objects.

Conclusion

This blog post is just a brief summary of everything we covered and learned. We came up with cooler names for some of the individual features, but left them out here for simplicity’s sake.

All in all, Lars Kölker, Lukas Hein, and I chose a really fun and interesting project study. If you have any further questions about our project, feel free to reach out to us.